This essay will explain how artificial intelligence (AI) could solve problems in the medical sector, specifically in therapy and counseling. I will first explain how AI could be implemented in therapy and counseling. Then, I will describe the problems this specific area of medicine is facing. Next, I will describe how AI can remedy these problems. I will then address counter-arguments to the proposal.

Before I begin, I will clarify which type of AI will be discussed. AI has many forms. Self-driving cars, virtual assistants, and image search engines are all areas where AI has shown its prowess. In this essay, I will focus on a specific implementation of AI known as Natural Language Processing (NLP). NLP allows computers to understand human language and provide responses to user input.

One example of AI that uses NLP is Amazon's Alexa. Alexa is a voice assistant that uses NLP to interpret human speech and decide what to do based on what it hears.

I believe that NLP algorithms can be used to alleviate problems faced by therapy and counseling services. One example of such AI is Woebot, an app that uses an NLP algorithm to apply cognitive behavioral therapy techniques in support of a patient’s mental health.

Therapy and counseling: current problems and challenges

Now, I will explain the problems facing therapy and counseling services in the medical sector. One of the most significant is cost. The cost of healthcare is a significant problem in the United States: according to the Health System Tracker by the Peterson Center on Healthcare and Kaiser Family Foundation, Americans spent an average of $10,637 per person on healthcare in 2018, while the average person in countries with comparable healthcare systems spent $5,527.

Despite this higher spending, the United States has poorer health outcomes. According to the Peter G. Peterson Foundation, in 2019 the United States had higher rates of obesity, diabetes, and infant mortality, and a lower life expectancy, than other OECD nations. High costs can be a barrier to accessing quality healthcare, including therapy and counseling, which makes cost one of the major problems affecting both mental health services and the healthcare system overall.

Another problem that therapy and counseling services are facing is the lack of staff. According to GoodTherapy, there is a severe shortage of therapists and counselors. By 2025, it is estimated that there will be a demand for 172,630 mental health counselors, but only 145,700 mental health counselors will be available. Also, by 2025, it is estimated that there will be a demand for 60,610 psychiatrists, but there will only be 45,210 psychiatrists available.
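To make the size of these gaps explicit, the short Python sketch below subtracts projected supply from projected demand for each profession. It only restates the GoodTherapy projections quoted above; the helper name `shortfall` is illustrative.

```python
# Projected 2025 figures as reported by GoodTherapy (quoted in the text above).
PROJECTIONS = {
    "mental health counselors": {"demand": 172_630, "supply": 145_700},
    "psychiatrists": {"demand": 60_610, "supply": 45_210},
}

def shortfall(demand: int, supply: int) -> tuple[int, float]:
    """Return the absolute gap and the percentage of demand left unmet."""
    gap = demand - supply
    return gap, 100 * gap / demand

for profession, figures in PROJECTIONS.items():
    gap, pct = shortfall(figures["demand"], figures["supply"])
    print(f"{profession}: {gap:,} short ({pct:.1f}% of demand unmet)")
```

Run as a script, it reports a gap of 26,930 counselors (about 15.6% of projected demand) and 15,400 psychiatrists (about 25.4%).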

Although an algorithm designed for therapy and counseling cannot replace medication, the statistics on psychiatrists still show a widespread need for healthcare professionals whose expertise is in mental health.

Due to the COVID-19 pandemic, there has been a surge in PTSD and anxiety cases among healthcare professionals. This surge will further increase the demand for therapists and counselors. These statistics clearly show a lack of staff who can adequately treat people seeking mental health services. With a lack of staff, it may take more time for a patient to find a therapist or counselor, which creates another accessibility barrier.

Therapy and counseling services also face sudden surges in demand caused by unexpected events. The unpredictable burden the COVID-19 pandemic placed on society has led many people, especially healthcare staff, to seek mental health resources. Other unexpected events, such as war or recession, can also increase demand for mental healthcare services.

Such increases in demand can limit the availability of mental health resources. There is significant evidence that economic recessions are associated with an uptick in mental health needs: according to Frasquilho et al., a literature review of more than one hundred papers shows a correlation between economic recessions and declining mental health. If a recession occurs, demand for mental healthcare resources is therefore likely to rise.

Armed conflicts can produce the same increase in mental health needs. According to the U.S. Department of Veterans Affairs, 10% of Gulf War veterans and 15% of veterans who served in Operation Enduring Freedom (OEF) and Operation Iraqi Freedom (OIF) have been diagnosed with PTSD. Approximately 1.9 million people served during OEF and OIF, so around 285,000 of them (15% of 1.9 million) were diagnosed with PTSD. If another armed conflict of a similar scale were to occur, roughly 285,000 additional people could need mental health services on top of those who already need care.
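The PTSD estimate above is a single multiplication; the sketch below spells it out so that other rates or deployment sizes can be substituted. Only the 15% rate and the 1.9 million figure come from the sources cited above; the function name is illustrative, and the sketch assumes each service member is counted once.

```python
# Figures cited above from the U.S. Department of Veterans Affairs.
OEF_OIF_SERVED = 1_900_000   # approximate number of people who served in OEF and OIF
OEF_OIF_PTSD_RATE = 0.15     # share of OEF/OIF veterans diagnosed with PTSD

def estimated_ptsd_cases(served: int, rate: float) -> int:
    """Estimate how many people in a deployed population are diagnosed with PTSD."""
    return round(served * rate)

print(f"{estimated_ptsd_cases(OEF_OIF_SERVED, OEF_OIF_PTSD_RATE):,}")  # 285,000
```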

Since the statistics do not cover the PTSD rates of civilians impacted by an armed conflict, the need is likely to be higher than the 285,000 people mentioned above. Based on these calculations, it is clear that unexpected events can increase the need for mental healthcare services. With the current lack of mental healthcare professionals, the inability of mental healthcare services to sufficiently respond to sudden changes poses a severe problem.

How NLP can tackle issues in therapy and counseling

Now, I will describe how a polished NLP service can remedy the aforementioned problems. I believe that a thoroughly developed NLP program designed for mental health would alleviate the pressing issues discussed previously.

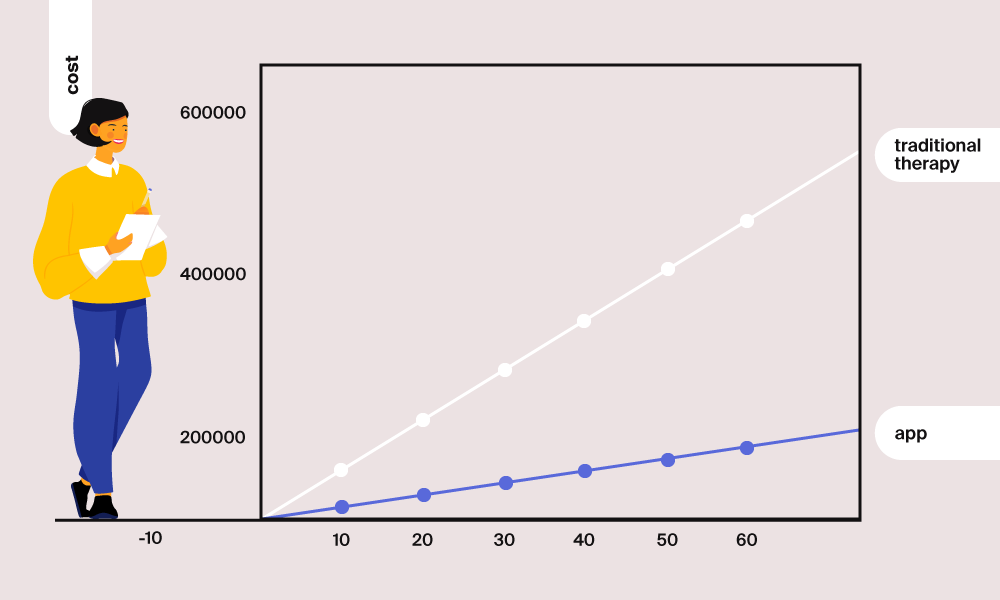

The first obstacle discussed was the prohibitive cost of healthcare. The price of an app and the resources required to run it are lower than the cost of sessions with a human therapist or counselor. To support this claim, I will compare the cost of an app subscription with the average cost of attending therapy sessions once a week. For all calculations, I will assume that a patient attends therapy for five years. I will also include a graph that shows costs for longer periods of treatment. Medication prices are not included because AI-powered therapy and counseling services are not meant to replace medication. I will start by calculating the average cost of using an app for mental health services, which is the cost of owning a phone plus the cost of the app itself.

App subscription costs vary significantly: some apps are free, while others require a paid subscription. For example, the meditation app Headspace charges $69.99 per year. Although Headspace is not meant for therapy, I will use it as a benchmark for health app subscription costs. To keep the analysis conservative, I will assume that the therapy app charges a subscription fee. I will use the following equation to calculate the cost of using an app for therapy or counseling:

Total Cost = Cost of Buying a Phone + ((Cost of Owning a Phone + Cost of Owning an App per Year) × Years)

The annual cost of owning a phone includes charging it, the phone bill, and internet (Wi-Fi) service.

According to Android Authority, the average price of a premium flagship smartphone in the United States in 2020 was $1,150.83. According to CNBC, the average person spent $1,188 per year on phone bills. According to Reviews.org, the average internet bill was $57 per month ($684 per year). According to Forbes, keeping an iPhone with a 1,440 mAh battery charged costs $0.25 per year; since electricity use varies, I will round this up to $2. Based on the above formula, the estimated average cost of using an app for therapy and counseling for five years is:

Total Cost = $1,150.83 + ($1,188 + $684 + $2 + $69.99) × 5 = $10,870.78
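The app-cost equation can be written as a small Python function, which makes it easy to rerun with a cheaper phone or a free app. This is a sketch: the parameter names are illustrative, the input values are the ones quoted above, and rounding to whole cents is a presentation choice.

```python
def app_total_cost(phone_price: float, phone_bill: float, internet: float,
                   electricity: float, app_subscription: float, years: int) -> float:
    """Total Cost = Cost of Buying a Phone
    + ((Cost of Owning a Phone + Cost of Owning an App per Year) x Years)."""
    annual_cost = phone_bill + internet + electricity + app_subscription
    return round(phone_price + annual_cost * years, 2)  # round to whole cents

# Values quoted in the essay, in US dollars (all per year except the one-off phone price).
total = app_total_cost(phone_price=1150.83, phone_bill=1188, internet=684,
                       electricity=2, app_subscription=69.99, years=5)
print(f"${total:,.2f}")  # $10,870.78
```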

Now, I will calculate the cost of attending a therapy session once a week. To do so, I will use the following formula:

Total Cost = (Annual Insurance Premium + (4 weeks × 12 months × Cost per Session)) × Years

According to the Kaiser Family Foundation, the average annual premium for one person in 2020 was $7,470. I will assume that the therapist is in-network with the insurance provider, so the patient only pays a co-pay. According to Thervo, the lower end of co-pays for therapy is $20.

Therefore, based on the above formula:

Total Cost = ($7,470 + (4 × 12 × $20)) × 5 = $42,150
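The therapy-cost formula translates the same way. The sketch below keeps the essay's approximation of 4 sessions per month; the constant and function names are illustrative.

```python
SESSIONS_PER_YEAR = 4 * 12  # the essay approximates weekly sessions as 4 per month for 12 months

def therapy_total_cost(annual_premium: float, copay: float, years: int) -> float:
    """Total Cost = (Insurance + (4 weeks x 12 months x Cost per Session)) x Years."""
    return (annual_premium + SESSIONS_PER_YEAR * copay) * years

total = therapy_total_cost(annual_premium=7470, copay=20, years=5)
print(f"${total:,.2f}")  # $42,150.00
```

Note that 4 × 12 = 48 sessions slightly undercounts a year of weekly sessions (52 weeks). Using 52 would raise the five-year total to $42,550, so the formula errs on the low side for therapy.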

Based on the above calculations, an AI-driven therapy or counseling service is significantly cheaper than seeing a human therapist or counselor: about $10,871 versus $42,150 over five years.

The accompanying graph estimates the long-term cost of therapy. It is only an estimate: prices may not remain constant, most people will probably need to replace their mobile devices every five to ten years, and costs differ depending on the price of the phone.
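The caveat about replacing phones can be folded into the same two formulas. The sketch below assumes, hypothetically, that the patient buys a new phone at the same price at the start of every `replace_every`-year period and that all other annual costs stay constant. It recomputes comparable totals rather than reproducing the graph.

```python
import math

# Annual figures from the essay (USD); the replacement interval is an assumption.
PHONE_PRICE = 1150.83
APP_ANNUAL = 1188 + 684 + 2 + 69.99      # phone bill + internet + charging + app subscription
THERAPY_ANNUAL = 7470 + 4 * 12 * 20      # insurance premium + 48 co-pays of $20

def app_cost(years: int, replace_every: int) -> float:
    """App route, buying a new phone at the start of each `replace_every`-year period."""
    phones_bought = math.ceil(years / replace_every)
    return phones_bought * PHONE_PRICE + APP_ANNUAL * years

def therapy_cost(years: int) -> float:
    """Weekly in-network therapy, following the essay's formula."""
    return THERAPY_ANNUAL * years

for years in (5, 10, 15, 20):
    print(f"{years:>2} years: app ${app_cost(years, 5):>10,.2f}"
          f"  therapy ${therapy_cost(years):>10,.2f}")
```

With a five-year replacement interval, the app route costs $10,870.78 at five years and $21,741.56 at ten years, against $42,150 and $84,300 for therapy.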

AI designed for mental healthcare can overcome the cost barrier.

Furthermore, a lack of therapists and counselors would not prevent patients from accessing mental healthcare services. Because AI is digital, many users can access it at the same time, so the number of users is not limited by a practitioner's caseload.

A human therapist, by contrast, can only take a limited number of clients. For example, suppose a therapist works 8 hours a day, seven days a week, and spends 2 hours per day on the business side of a clinic. That leaves 6 hours a day, or 42 hours a week, for sessions. If each client meets the therapist for about an hour every week, the therapist can see at most 42 clients. An AI therapist would overcome the shortage of therapists and counselors described earlier.
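The caseload bound above follows from a few lines of arithmetic. This sketch assumes, as the example does, that every non-administrative hour is a billable one-hour session; it ignores breaks, cancellations, and note-taking time, so real caseloads would be lower. The function name is illustrative.

```python
def max_weekly_clients(hours_per_day: float, admin_hours_per_day: float,
                       days_per_week: int, session_hours: float = 1.0) -> int:
    """Upper bound on weekly clients if every non-admin hour is spent in sessions."""
    clinical_hours = (hours_per_day - admin_hours_per_day) * days_per_week
    return int(clinical_hours // session_hours)

# Scenario from the text: 8-hour days, 7 days a week, 2 hours a day of administration.
print(max_weekly_clients(8, 2, 7))  # 42
```

A more typical five-day week gives `max_weekly_clients(8, 2, 5)`, or 30 clients.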

For similar reasons, AI would not face staffing issues when an unexpected event causes an influx in mental healthcare needs. A competent AI running an NLP algorithm could overcome the issues that therapists and counselors face today by reducing costs and being highly accessible.

Concerns about using AI in therapy

However, there are issues with this solution that must be addressed. None of the services aiming to support mental health through NLP have directly addressed all of the concerns I will state below.

One concern is how patient privacy will be maintained. For human healthcare professionals, the Health Insurance Portability and Accountability Act (HIPAA) regulates how patient information is stored and shared, and one could ask how the same protections would apply to an AI for therapy and counseling. This concern is well founded.

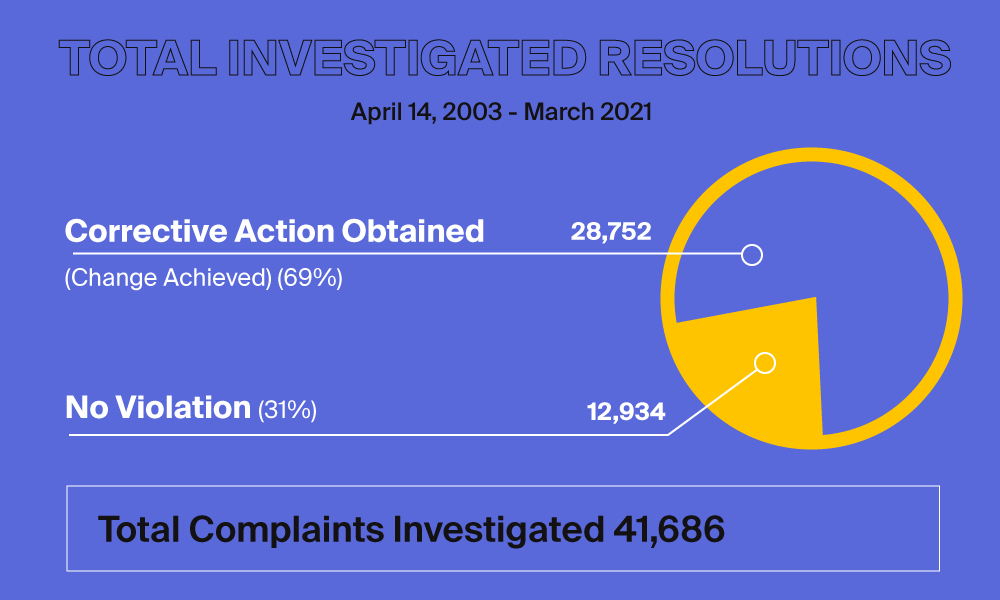

For example, on October 24th, 2020, a major mental health clinic in Finland was hacked. The hacker (or hackers) stole healthcare data, including notes written by therapists, and demanded that each patient pay €200 (around $250) to keep their information private. Furthermore, the Department of Health and Human Services (HHS) publishes statistics on privacy rule complaints and violations. From April 14th, 2003 to March 2021, HHS investigated 41,686 complaints, of which 28,752 prompted a “corrective action.”

If we take a conservative approach and read “March 2021” as March 31st, 2021 (the latest possible end date, which yields the lowest daily rate), roughly six complaints were filed per day, about four of which required some form of corrective action. These examples show that privacy violations involving health data are a highly relevant problem.
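The daily rates can be checked with Python's standard `datetime` module. The sketch assumes, as the text does, that the reporting period ends on March 31st, 2021; the helper name `per_day` is illustrative.

```python
from datetime import date

# HHS figures quoted above.
COMPLAINTS = 41_686
CORRECTIVE_ACTIONS = 28_752
START = date(2003, 4, 14)
END = date(2021, 3, 31)  # assumption: "March 2021" read as the last day of that month

def per_day(count: int, start: date, end: date) -> float:
    """Average number of events per calendar day between two dates."""
    return count / (end - start).days

print((END - START).days)                                  # 6561 days
print(round(per_day(COMPLAINTS, START, END), 2))           # complaints per day
print(round(per_day(CORRECTIVE_ACTIONS, START, END), 2))   # corrective actions per day
```

This gives about 6.35 complaints and 4.38 corrective actions per day, matching the rounded figures in the text. An earlier end date would spread the same totals over fewer days and produce higher rates.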

One response to this concern examines the most common HIPAA violations by employees and argues that an AI system could be designed so that these violations cannot occur. According to HIPAA Journal, the most common violations are removing or transferring data to unauthorized locations, leaving data easily accessible, and releasing data to unauthorized entities or without consent.

An AI system, in turn, could be programmed to prevent each of these. It could keep an allowlist of IP addresses, each with a time window during which patient data may be sent to or received from it. It could require a signature or password to obtain a patient’s consent before transferring data. It could use a timer that closes any window displaying patient information. Lastly, the data it stores could be encrypted and require multiple forms of identification for access. Since HIPAA-compliant software already exists, I believe it would be possible to implement the same protocols in an NLP system. Therefore, the privacy concern can be addressed through rigorous and careful programming.
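Three of the safeguards listed above can be sketched in Python using only the standard library. This is a minimal illustration under stated assumptions, not a HIPAA-compliant implementation: the allowlist entries are hypothetical (a private range and a documentation address), consent is reduced to a boolean that a separate signature or password check would set, time windows are assumed not to cross midnight, and encryption is omitted because it needs a dedicated cryptography library.

```python
from dataclasses import dataclass
from datetime import datetime, time
from ipaddress import ip_address, ip_network

@dataclass(frozen=True)
class AllowedEndpoint:
    """A network permitted to exchange patient data, and the daily window when it may."""
    network: str   # CIDR notation, e.g. "10.20.0.0/16"
    start: time    # window opens (inclusive)
    end: time      # window closes (inclusive); windows crossing midnight are not supported

# Hypothetical allowlist: a private clinic network during office hours, plus one
# documentation-range address standing in for a partner system at any hour.
ALLOWLIST = [
    AllowedEndpoint("10.20.0.0/16", time(8, 0), time(18, 0)),
    AllowedEndpoint("192.0.2.10/32", time.min, time.max),
]

def transfer_permitted(ip: str, when: datetime, patient_consented: bool) -> bool:
    """Permit a transfer only with consent, to an allowlisted address, inside its window."""
    if not patient_consented:  # stands in for a verified signature or password step
        return False
    addr = ip_address(ip)
    return any(
        addr in ip_network(entry.network) and entry.start <= when.time() <= entry.end
        for entry in ALLOWLIST
    )

class PatientScreen:
    """Hides patient information once no activity has occurred for `timeout_s` seconds."""

    def __init__(self, timeout_s: float) -> None:
        self.timeout_s = timeout_s
        self.visible = False
        self.last_activity = 0.0

    def show(self, now: float) -> None:
        """Display patient data and record the time of this activity."""
        self.visible = True
        self.last_activity = now

    def tick(self, now: float) -> None:
        """Called periodically; closes the view after the inactivity timeout."""
        if self.visible and now - self.last_activity >= self.timeout_s:
            self.visible = False
```

For example, `transfer_permitted("10.20.5.7", datetime(2021, 3, 1, 9, 30), True)` returns `True`, while the same request at 20:00 or without consent returns `False`. Timestamps are passed in explicitly (for instance from `time.monotonic()`) so that the timer logic can be tested without waiting.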

Another criticism concerns responsibility. There have been cases where AI has caused harm. For example, Microsoft developed Tay, a Twitter chatbot designed to replicate the online behavior of a teenager by learning from other users’ tweets. The project was terminated after Tay began tweeting offensive content. Although the source of the problem was trolls feeding Tay offensive content, the case shows that an NLP system can say something confusing, off the mark, or offensive.

In the context of therapy and counseling, the concern is who will take responsibility if an AI says something offensive or harms a patient. There are several responses to this concern. One response is that no one is responsible: since a computer cannot be punished as a human would, there is no one to punish. Under this view, if AI causes harm to a patient, no one will be prosecuted, and the patient may receive no compensation. This view may be seen as insufficient, since a harmed person seems to deserve compensation but would receive none.

Alternatively, one could argue that the manufacturer should be held liable for the actions of the AI. This viewpoint seems reasonable because the same standard applies to other medical devices, and medical device lawsuits offer a parallel case for AI. For example, the medical device company Stryker paid $1.4 billion in settlements over defective hip replacements. There is therefore precedent for holding, or at least attempting to hold, a manufacturer liable for a defective product.

The same reasoning applies by analogy to AI that has caused harm. An AI therapist is a product designed for medical purposes, so if it harmed a patient, its manufacturer could be held responsible, just as manufacturers of defective medical devices are. Given the precedent that manufacturers can be sued for damage caused by their defective devices, holding manufacturers accountable for damage caused by AI seems reasonable.

Another counter-argument to the proposal is that the use of an algorithm for treatment could be paternalistic. When patients seek therapy or counseling, they will likely discuss sensitive and personal things about their lives. When a patient discusses these worries with a licensed therapist or counselor, the therapist or counselor maintains a human connection and tries to help them overcome their concerns. With the use of an algorithm, however, the human connection is not present, only simulated.

The concern is that, from the patient’s perspective, using an algorithm is paternalistic because it seems to downgrade their issues to something a computer could solve. One response accepts that this concern is valid and argues that the role of AI should be scaled back.

AI should not be used to replace professional therapists and counselors. Instead, AI should serve as a first responder until a human therapist or counselor is available.

Based on the above, the solution is promising, but development should proceed diligently and carefully, and the problems stated above must be addressed. An NLP algorithm for therapy and counseling is meaningless if it does more harm than good. Woebot shows significant promise: not only is there evidence to suggest its effectiveness, but concerns stated above, such as privacy and HIPAA compliance, also seem to be addressed.

Conclusion

AI that uses an NLP algorithm could alleviate several problems facing therapy and counseling services in the healthcare industry. One is the cost of accessing mental health services: the cost of insurance and of the healthcare service itself is significantly higher than the cost of an app. An AI-driven therapy and counseling service could also ease the shortage of therapists and counselors, including during sudden surges in demand.

At the same time, some concerns must be addressed. The first is patient privacy, which can be protected through rigorous programming. Another is who will take responsibility if AI harms a patient. One response holds that no one can be held liable; a more reasonable response is that the manufacturer of the algorithm should be held accountable, paralleling lawsuits filed over faulty medical devices. A final concern is whether using AI for counseling and therapy would be paternalistic. One response is that AI should not replace human counselors and therapists but should serve as a first responder until a human is available.

Given the above, I believe that AI may be a powerful tool in therapy and counseling. Because of the concerns stated above, however, such an AI must be developed cautiously and diligently.

References

- “Natural Language Processing (NLP).” SAS, SAS Institute Inc., www.sas.com/en_us/insights/analytics/what-is-natural-language-processing-nlp.html
- “2. Operation Enduring Freedom and Operation Iraqi Freedom: Demographics and Impact.” Returning Home from Iraq and Afghanistan: Preliminary Assessment of Readjustment Needs of Veterans, Service Members, and Their Families, National Academies Press, 2010, www.ncbi.nlm.nih.gov/books/NBK220068/. See Table 2.1.
- “2020 Employer Health Benefits Survey.” KFF, Kaiser Family Foundation, 8 Oct. 2020, www.kff.org/report-section/ehbs-2020-section-1-cost-of-health-insurance/. See Section 1: Cost of Health Insurance.
- Alder, Steve. “The Most Common HIPAA Violations You Should Be Aware Of.” HIPAA Journal, HIPAA Journal, 20 Jan. 2021, www.hipaajournal.com/common-hipaa-violations/
- Amin, Krutika, et al. “How Does Cost Affect Access to Care?” Peterson-KFF Health System Tracker, Peterson Center on Healthcare and KFF, 5 Jan. 2021, www.healthsystemtracker.org/chart-collection/cost-affect-access-care/#item-start
- Davis, Akilah. “Therapy Demands on the Rise as COVID-19 Pandemic Hits 6-Month Mark.” ABC11 Raleigh-Durham, ABC, Inc., WTVD-TV Raleigh-Durham, 10 Sept. 2020, abc11.com/therapy-during-pandemic-mental-health/6416039/
- Desmos, www.desmos.com/calculator
- “FAQ.” Woebot Health, Woebot Health, woebothealth.com/FAQ
- Frasquilho, Diana, et al. “Mental Health Outcomes in Times of Economic Recession: A Systematic Literature Review.” BMC Public Health, vol. 16, 115, 3 Feb. 2016, doi:10.1186/s12889-016-2720-y
- Gonfalonieri, Alexandre. “How Amazon Alexa Works? Your Guide to Natural Language Processing (AI).” Towards Data Science, Medium, 31 Dec. 2018, towardsdatascience.com/how-amazon-alexa-works-your-guide-to-natural-language-processing-ai-7506004709d3
- Helman, Christopher. “How Much Electricity Do Your Gadgets Really Use?” Forbes, Forbes Magazine, 7 Sept. 2013, www.forbes.com/sites/christopherhelman/2013/09/07/how-much-energy-does-your-iphone-and-other-devices-use-and-what-to-do-about-it/?sh=26dbf8292f70
- “How Much Does Therapy Cost In 2021?” Thervo, Liaison Ventures, Inc., thervo.com/costs/how-much-does-therapy-cost
- Hunt, Elle. “Tay, Microsoft’s AI Chatbot, Gets a Crash Course in Racism from Twitter.” The Guardian, Guardian News and Media, 24 Mar. 2016, www.theguardian.com/technology/2016/mar/24/tay-microsofts-ai-chatbot-gets-a-crash-course-in-racism-from-twitter
- “Is There a Shortage of Mental Health Professionals in America?” GoodTherapy, GoodTherapy, 26 Mar. 2020, www.goodtherapy.org/for-professionals/personal-development/become-a-therapist/is-there-shortage-of-mental-health-professionals-in-america
- Kurani, Nisha, and Cynthia Cox. “What Drives Health Spending in the U.S. Compared to Other Countries.” Peterson-KFF Health System Tracker, Peterson Center on Healthcare, 28 Sept. 2020, www.healthsystemtracker.org/brief/what-drives-health-spending-in-the-u-s-compared-to-other-countries
- Leonhardt, Megan. “You May Be Able to Save up to $268 a Year by Switching Your Cell Phone Plan.” CNBC Make It, CNBC LLC., 30 Oct. 2019, www.cnbc.com/2019/10/25/you-can-save-up-to-268-a-year-by-switching-your-cell-phone-plan.html
- McNally, Catherine. “How Much Is the Average Internet Bill?” Reviews.org, Reviews.org, 8 Dec. 2020, www.reviews.org/internet-service/how-much-is-internet
- “Numbers at a Glance.” HHS.gov, US Department of Health and Human Services, 12 Apr. 2021, www.hhs.gov/hipaa/for-professionals/compliance-enforcement/data/numbers-glance/index.html
- “Post Traumatic Stress Disorder (PTSD).” War Related Illness and Injury Study Center, U.S. Department of Veterans Affairs, 13 Dec. 2013, www.warrelatedillness.va.gov/warrelatedillness/education/healthconditions/post-traumatic-stress-disorder.asp
- “Security.” Woebot Health, Woebot Health, woebothealth.com/security
- “‘Shocking’ Hack of Psychotherapy Records in Finland Affects Thousands.” The Guardian, Guardian News and Media, 26 Oct. 2020, www.theguardian.com/world/2020/oct/26/tens-of-thousands-psychotherapy-records-hacked-in-finland
- “Subscribe to Headspace.” Headspace, Headspace Inc., www.headspace.com/subscriptions
- “Summary of the HIPAA Security Rule.” HHS.gov Health Information Privacy, US Department of Health and Human Services, 26 July 2013, www.hhs.gov/hipaa/for-professionals/security/laws-regulations/index.html
- Triggs, Robert. “Did Smartphones Get a Lot More Expensive in 2020? Let’s Look at the Numbers.” Android Authority, Authority Media, 14 Dec. 2020, www.androidauthority.com/smartphone-price-1175943
- Turner, Terry. “Hip Replacement Lawsuits: Verdicts and Settlements.” Edited by Kim Borwick, ConsumerNotice.org, ConsumerNotice.org, 1 Dec. 2020, www.consumernotice.org/legal/hip-replacement-lawsuits
- “What Is Artificial Intelligence and How Is It Used?” News, European Parliament, 29 Mar. 2021, www.europarl.europa.eu/news/en/headlines/society/20200827STO85804/what-is-artificial-intelligence-and-how-is-it-used
- “Why Are Americans Paying More for Healthcare?” Peter G. Peterson Foundation, Peter G. Peterson Foundation, 20 Apr. 2020, www.pgpf.org/blog/2020/04/why-are-americans-paying-more-for-healthcare

About the Author

Lynn Serizawa

New York University (NYU)

- Field of Study: Mathematics and Philosophy

- Expected Year of Graduation: 2022

- Chosen Prompt: How artificial intelligence (AI) can be implemented in therapy and counseling, what problems it solves and how it can be used to improve patients’ mental health.